Gesture Fader

Source Code

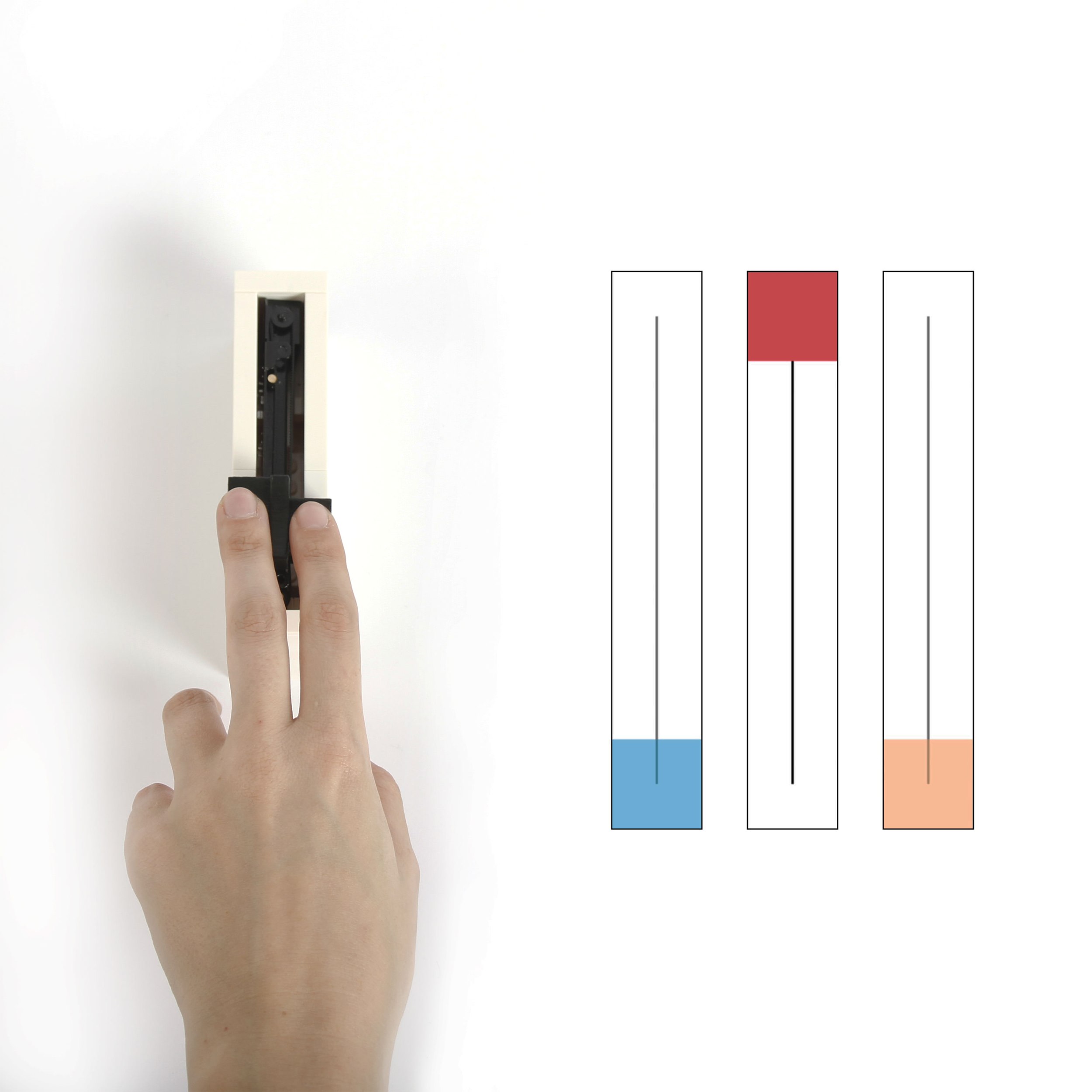

Gesture Fader in a Lego housing.

Gesture Fader with three visualized parameters.

This hardware prototype took a different approach from that taken in some of the software interactions. With software I was often thinking “how can I break away from the hardware metaphor?”, whereas here I was thinking about how existing hardware could be augmented.

This hardware prototype came from thinking about hardware sliders with what Ramesh Raskar would call an “x + y” approach. In this case the “y” was Disney’s Touché work, which uses swept capacitance to detect different gestures. I wondered if it would be possible to detect different types of touches on a fader, and how that might be useful.

Swept capacitance simply means taking a capacitance reading at a range of frequencies instead of at a single one. This gives you a curve rather than a single value, and the curves for different types of touch tend to be distinct enough to tell apart.
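To make the idea of "distinct curves" concrete, here is a minimal sketch that compares a measured curve against one stored template per gesture and picks the closest. This is not the classifier used in the prototype: the function names, the nearest-template technique, and the sample numbers are all assumptions for illustration.

```javascript
// Hypothetical helper: Euclidean distance between two equal-length curves.
function curveDistance(a, b) {
  if (a.length !== b.length) throw new Error('curves must have the same length');
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// Return the label of the stored template closest to the measured curve.
function nearestGesture(curve, templates) {
  let best = null;
  let bestDistance = Infinity;
  for (const [label, template] of Object.entries(templates)) {
    const distance = curveDistance(curve, template);
    if (distance < bestDistance) {
      bestDistance = distance;
      best = label;
    }
  }
  return best;
}

// Made-up readings at five sweep frequencies, for illustration only.
const templates = {
  oneFinger: [10, 40, 80, 40, 10],
  twoFingers: [10, 30, 50, 70, 30],
};
const guess = nearestGesture([11, 38, 77, 43, 12], templates);
```

A single-frequency reading would collapse each of these curves to one number, which is why the sweep makes gestures easier to separate.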

I started with a Bourns Motorized Slide Potentiometer which has a touch sensor. For the first quick prototype I used the conductive foam (that came with some electronics) as the fader cap. This gave me enough of a footprint to move the fader with more than one finger.

Having used an Arduino implementation of the Touché technique in a previous project, I had the sensing code ready to go. The test was a success and, with some exaggeration of the gestures, I could distinguish between one and two fingers. To increase the distinction between different gestures I took a two-pronged approach: designing a conductive fader cap that would encourage more distinct gestures, and better software classification of the sensing curves.

Hardware

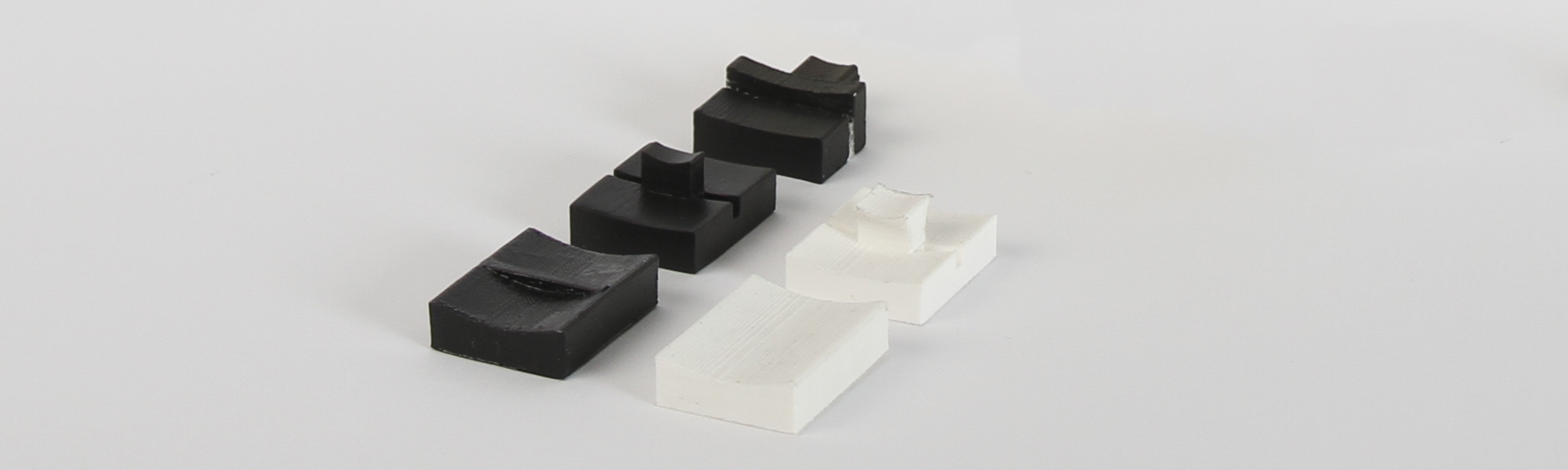

I designed and 3D printed a range of caps which encouraged the user to separate their fingers and to make finger contact areas of different sizes. These were printed in PLA, coated in Bare Conductive Electric Paint and given a thin clear coat spray to stop the paint coming off on my fingers.

I experimented with protruding ‘crowns’ of various shapes that would have enough purchase to move the slider but have a small footprint, as well as with non-symmetrical halves of the cap. For the demo it was enough to just divide the cap in two so that a single finger would be to one side, and not directly on top of the metal bar the cap was attached to.

Selection of fader caps.

Cap that encourages fingers to avoid the center metal bar.

Lego!

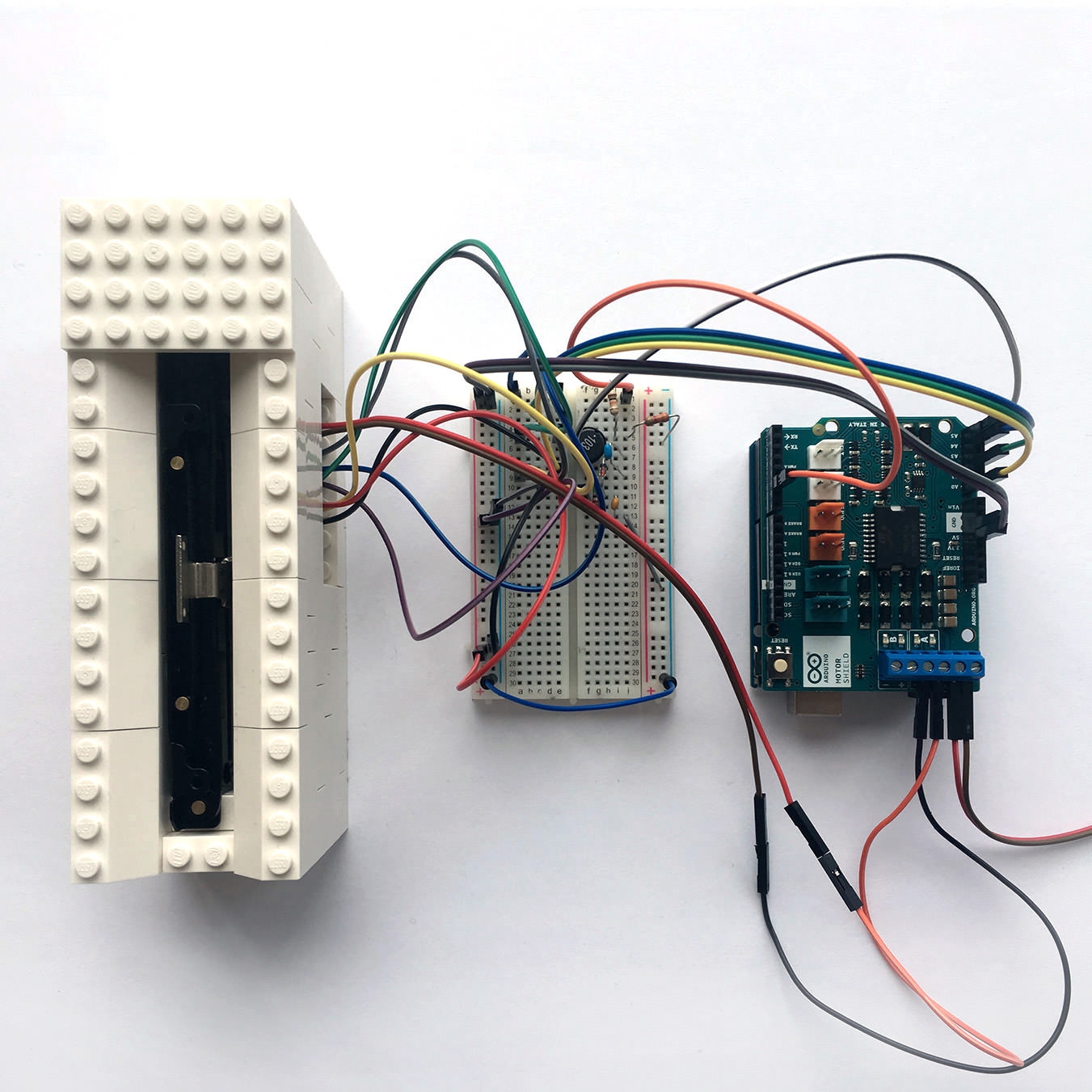

I built the housing out of Lego, using the all-white Lego Architecture set. This let me build something stable very quickly. Towards the end of the whole project I ended up iterating on the chunky version that’s in the video and making the slimmer, slicker version you see in the photos.

Software

To improve the classification I decided to try some lightweight machine learning. As I was using the browser canvas to do the graphics for all of the software interactions, I decided to try keeping all of the software for this in JavaScript as well. The microcontroller communicated with Node over serial, and Node sent the data to the browser via a WebSocket. Using convnet.js, I experimented with neural net architectures and found that a small neural net (input : 6 neuron fully connected : 2 neuron fully connected : softmax) did a good job of classification. For each gesture, 50 samples of the curve data were recorded, then the network was trained for 1000 iterations. This was all done in a Web Worker, to keep the UI responsive.
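As a rough sketch, the architecture above could be written as plain layer definitions in the object format that convnet.js accepts in `makeLayers`. Several things here are assumptions rather than details from the prototype: the input size (`CURVE_LENGTH`), the `tanh` activation, and the reading that the final "2 neuron fully connected : softmax" pair corresponds to a softmax layer with two classes (convnet.js adds the fully connected layer in front of a softmax itself). The `buildTrainingSet` helper is hypothetical and does not call the library.

```javascript
// Assumed number of sweep frequencies per reading; the post does not state it.
const CURVE_LENGTH = 64;

// Layer definitions as plain data, in the format convnet.js expects.
const layerDefs = [
  { type: 'input', out_sx: 1, out_sy: 1, out_depth: CURVE_LENGTH },
  { type: 'fc', num_neurons: 6, activation: 'tanh' }, // activation is an assumption
  { type: 'softmax', num_classes: 2 }, // class count inferred from the description
];

// Hypothetical helper: pair each recorded curve with an integer class label.
// samplesByGesture[i] holds the curves recorded for gesture number i.
function buildTrainingSet(samplesByGesture) {
  const examples = [];
  samplesByGesture.forEach((curves, classIndex) => {
    for (const curve of curves) {
      examples.push({ input: curve, label: classIndex });
    }
  });
  return examples;
}
```

With 50 recorded curves per gesture, `buildTrainingSet` would return 50 labelled examples per class, ready to be fed to a trainer inside the worker.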

Proof of concept

The video shows the proof of concept, with each gesture corresponding to a different screen fader. This really just proves the technology works, creating a platform for designing new interactions. For example, you could map one finger to move in increments of 1 and two fingers to move in increments of 10.
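The increment idea can be sketched in a few lines. This is only an illustration of the mapping, not code from the prototype; the gesture labels, the 0 to 100 range, and the `nextValue` helper are assumptions.

```javascript
// Assumed gesture labels mapped to step sizes.
const STEP_BY_GESTURE = { oneFinger: 1, twoFingers: 10 };

// Move `current` one step in `direction` (+1 or -1), sized by the gesture,
// and clamp the result to the [min, max] range.
function nextValue(current, direction, gesture, min = 0, max = 100) {
  const step = STEP_BY_GESTURE[gesture] ?? 1; // unknown gestures fall back to 1
  const moved = current + Math.sign(direction) * step;
  return Math.min(max, Math.max(min, moved));
}
```

For instance, `nextValue(50, -1, 'twoFingers')` gives 40, while the same movement with one finger gives 49.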

Project Fork: Tactile Fader

The Gesture Fader setup.

This prototype was another forked project. I was originally trying to have all of the gesture recognition features and a set of tactile features in one prototype. Not only did this become a conceptual mess, but it also became too difficult to implement.

The gesture recognition requires changing the clock frequency, which caused havoc with the motor control and touch recognition timing.

Process

One of the goals of the overall How to Change a Number project was to go through the design process multiple times. An inevitable part of the experience is discovering that other people have already done your innovative new idea. This project became an exercise in dealing with that experience. My first idea was to add context-specific resistance, as with the Resistant Resistor. I then found the FireFader. Rather than give up, I decided to continue to see if there could be some untapped ground.

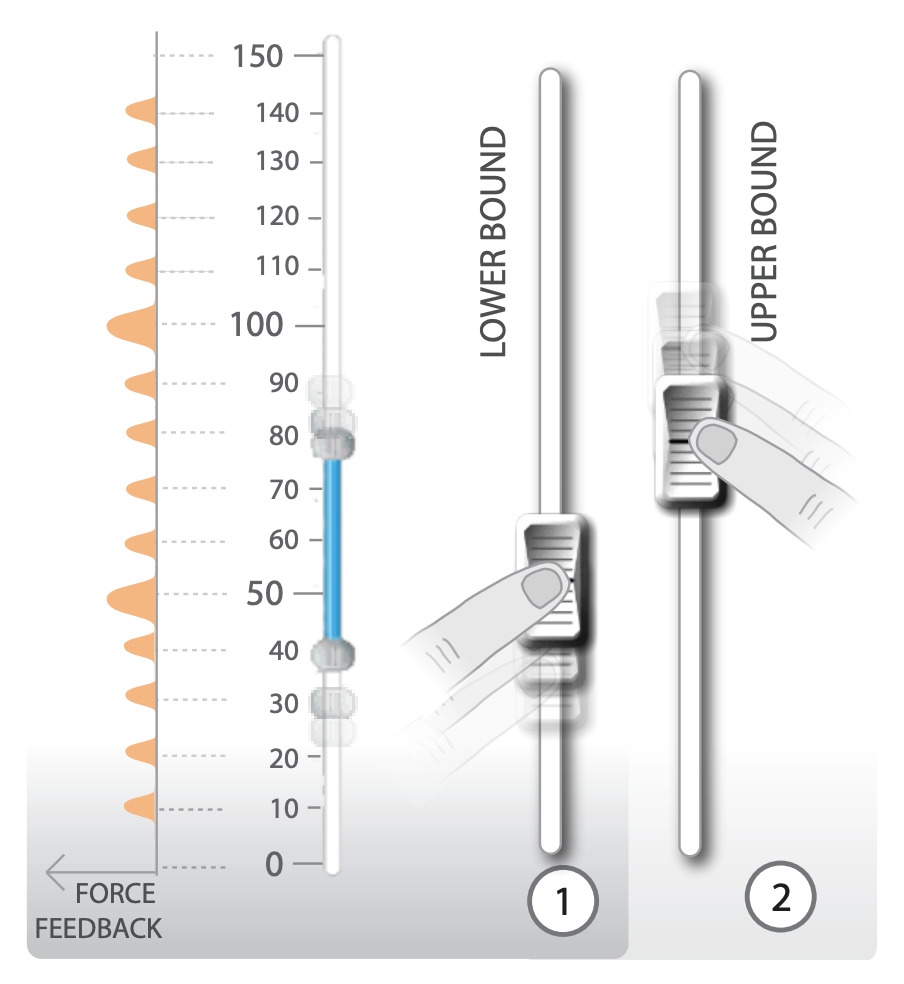

I hadn’t found the continuous resistance to be a very pleasant tactile experience. Instead I experimented with having very small haptic responses at evenly spaced ‘ticks’ along the fader’s length, implemented by moving the motor backwards and forwards a small amount. This felt much better, and could be programmed with different curves to match the underlying data, or to have bigger feedback at more significant intervals. I then discovered this paper where they had also implemented haptic ticks and a haptic illusion of a valley.

Ticks from the Force Feedback Slider
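A minimal sketch of the tick logic, assuming integer fader readings and a fixed tick spacing: on each new reading, work out which tick positions were crossed since the last one, and give each a pulse strength. The function names, the spacing, and the "every fifth tick is stronger" rule are hypothetical; the actual motor pulse is left out.

```javascript
// Return the tick positions in the interval (lo, hi], where lo and hi are the
// smaller and larger of the two readings. Resting on a tick does not retrigger it.
function crossedTicks(previous, current, spacing) {
  const lo = Math.min(previous, current);
  const hi = Math.max(previous, current);
  const ticks = [];
  // Start at the first multiple of `spacing` strictly greater than `lo`.
  for (let t = (Math.floor(lo / spacing) + 1) * spacing; t <= hi; t += spacing) {
    ticks.push(t);
  }
  return ticks;
}

// Hypothetical strength rule: a stronger pulse on every `majorEvery`-th tick,
// standing in for "bigger feedback at more significant intervals".
function tickStrength(tick, spacing, majorEvery = 5) {
  const index = Math.round(tick / spacing);
  return index % majorEvery === 0 ? 2 : 1;
}
```

Checking the interval between readings, rather than testing whether the current reading equals a tick, means a fast movement that jumps over a tick still produces feedback.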

Determined that there was still something to be done in this area, I considered how the tactile feedback could be combined with gesture recognition. Because of the previously mentioned difficulty in combining the swept-capacitance gesture recognition with motor control, I decided to implement double and triple click recognition instead.

Presets

The goal was to make it possible to save (and delete) useful values along the fader, which could then be found haptically. I decided on double click to save a preset and triple click to delete it.

A nice unintended outcome of being able to save presets was the ability to define subranges within the slider.

One thing to consider when deleting a preset was that some degree of tolerance was needed to determine when the fader was ‘on’ a saved value. The resolution of the reading meant that doing an equality check (e.g. value == 100) would often fail, as it was possible to skip the value or not land on it perfectly. The haptic feedback when hitting the saved value could also displace the fader slightly.
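The tolerance check might look something like the sketch below. The tolerance of 4 units and both helper names are assumptions, not values from the prototype.

```javascript
// Assumed tolerance, in fader reading units.
const PRESET_TOLERANCE = 4;

// Return the saved preset closest to `value` if it lies within the tolerance,
// otherwise null.
function findPresetNear(value, presets, tolerance = PRESET_TOLERANCE) {
  let nearest = null;
  for (const preset of presets) {
    const distance = Math.abs(preset - value);
    if (distance <= tolerance && (nearest === null || distance < Math.abs(nearest - value))) {
      nearest = preset;
    }
  }
  return nearest;
}

// Return a new preset list with the preset near `value` removed, if there is one.
function deletePresetNear(value, presets, tolerance = PRESET_TOLERANCE) {
  const target = findPresetNear(value, presets, tolerance);
  return target === null ? presets : presets.filter((p) => p !== target);
}
```

With presets at 100 and 500, a reading of 102 still counts as being on the first preset, whereas `value == 100` would have missed it.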

Implementing double and triple click at the hardware level was an interesting experience. As well as determining good values for the inter-click interval, there are also decisions to be made about when to execute the action. The decision to recognise more than one click means that you cannot execute on the final ‘down’ (except for the maximum click number); it has to happen either on the ‘up’ or after a timeout. This had implications for the feedback mechanism for when a preset had been saved or deleted.
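One way to sketch this timing problem is a small counter that is fed release events and polled from the main loop. It resolves immediately on the release that reaches the maximum click count, and otherwise waits for the inter-click interval to expire. This is an illustrative state machine, not the prototype's firmware; the class name, the 300 ms interval, and the choice to count on release are assumptions.

```javascript
class ClickCounter {
  constructor({ maxClicks = 3, intervalMs = 300 } = {}) {
    this.maxClicks = maxClicks;
    this.intervalMs = intervalMs; // assumed inter-click interval
    this.count = 0;
    this.lastUpAt = null;
  }

  // Call when the touch sensor reports a release ('up') at time nowMs.
  // Returns the click count if the maximum was reached, otherwise null.
  release(nowMs) {
    this.count += 1;
    this.lastUpAt = nowMs;
    return this.count >= this.maxClicks ? this.finish() : null;
  }

  // Call regularly with the current time. Returns the click count once the
  // interval has passed without another release, otherwise null.
  poll(nowMs) {
    if (this.count > 0 && nowMs - this.lastUpAt >= this.intervalMs) {
      return this.finish();
    }
    return null;
  }

  // Report the accumulated count and reset for the next gesture.
  finish() {
    const clicks = this.count;
    this.count = 0;
    this.lastUpAt = null;
    return clicks;
  }
}
```

A caller would treat a result of 2 as "save preset" and 3 as "delete preset", and ignore a result of 1, which is just an ordinary touch.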

Feedback

I wanted to keep the feedback tactile, so I tried using the small backwards-and-forwards movement used for the ticks. This wouldn’t work with the action happening on release, as the finger would already have been removed and wouldn’t feel it. It was also difficult to make it work reliably with the timeout method (where the finger would still be touching), because the displacement could then cause the motor to jam against the finger.

I ended up having the action trigger on release, but with an exaggerated movement which could at least be heard and seen, making it obvious that the gesture had been successful.

Demo

Proof of concept video, showing recognition of different gestures: one finger, two fingers, and a single finger tip, each assigned to a different colour fader.