Opening the tiny window

Direct manipulation audio software interfaces

This project was undertaken during an internship at HARC with Principal Investigator Alex Warth and his group. The goal was to apply some of the ideas I'd been thinking about, influenced by the principles of the lab (such as directness, liveness and short feedback loops), to the field of music tools.

Which one of these is hardware, and which is software?

The answer doesn’t really matter (the top one is software): they have exactly the same interface. The upside of this is that knowledge is transferable between the two: learn to use one and you can use either. This is of most benefit to users who learnt on the hardware first (primarily engineers), who are now a tiny proportion of the population. The first compressor most people encounter these days is in software, and they are more likely to be approaching it as a musician. These users are being dealt a trade-off that they might not even be aware of.

What’s wrong with this interface? In hardware, not a lot. Each input control is linked to a hardware component inside; the controls are tactile and designed to be turned by hand, and the limits of the rotary pots can be felt as they are reached. The user also gets real-time visual feedback on the signal processing that is happening.

How about the software interface? “Skeuomorphism!” the graphic designers cry. “This should be redesigned!” It’s true that this interface should be redesigned, but not to suit the current graphic design trend. It should be redesigned to suit the medium it exists in: the computer. At the moment it has held onto the design of the old medium (hardware). As an analogy, this is a scanned journal paper, not the explorable explanation we deserve.

Pandering to the older, diminishing audience is what my friend Jack Armitage calls “the half-life of knowledge in action, and another reason why skeuomorphs are only a short term design hack”.

How can we do better?

A simple redesign might swap out the controls that just don’t make sense on a screen, namely the rotary pots, which as GUI elements are fiddly to use with a mouse or finger. Most of these are actually controlled by dragging straight up and down, basically a Frankenstein stitching together of the visuals of a rotary pot and the interaction of a slider.

But even this isn’t much of an improvement, and barely takes advantage of the affordances of the computer.

Directness: Directly linking representations and interaction

This threshold control from Logic Pro X's Compressor takes all of its cues from the hardware world: it shows us the current input signal, and has a completely separate control for the threshold volume. Making informed adjustments requires looking back and forth between our control and the visualisation.

The Waves L2 threshold control improves on this by putting the threshold control *on* the input signal. We can visually set our threshold directly on the audio signal, so we have a more direct connection between the signal we are affecting and the controls we are using to affect it.

With a little work, this connection between representation and interaction can be improved further:

Setting the threshold using a prototype dynamic range tool

Tiny windows: the problem with narrow feedback channels

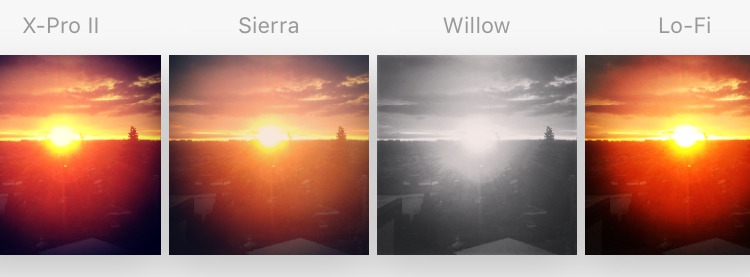

There is a bigger underlying issue: we are making decisions that will affect the whole recording based on this tiny real-time view of the world. This is like trying to decide which filter to use on an image by shifting a tiny square preview around the image and trying to imagine what the whole thing will look like. Try it below:

Play with me, I'm no fun!

Obviously we should be able to see all of the images, fully filtered like this:

This isn't an entirely fair comparison, as we can't listen to multiple chunks of audio simultaneously, but the visualisation can provide a useful insight.

The obvious design

Unfortunately, most audio tools today work like the first example: we have to jump back and forth between sections of a track to check the effect of our adjustments.

The typical process of applying an effect to a track

Wouldn’t it be easier if we knew how the decisions we are making with our audio tools are affecting the whole recording?

The land after time: increasing the bandwidth of feedback loops

In the analogue days there was only real time: equipment would take a signal in, mess with it and send it out. If the equipment were a person, it would be either a serious amnesiac or a Zen master, living only in the now with no concept of the past or the future.

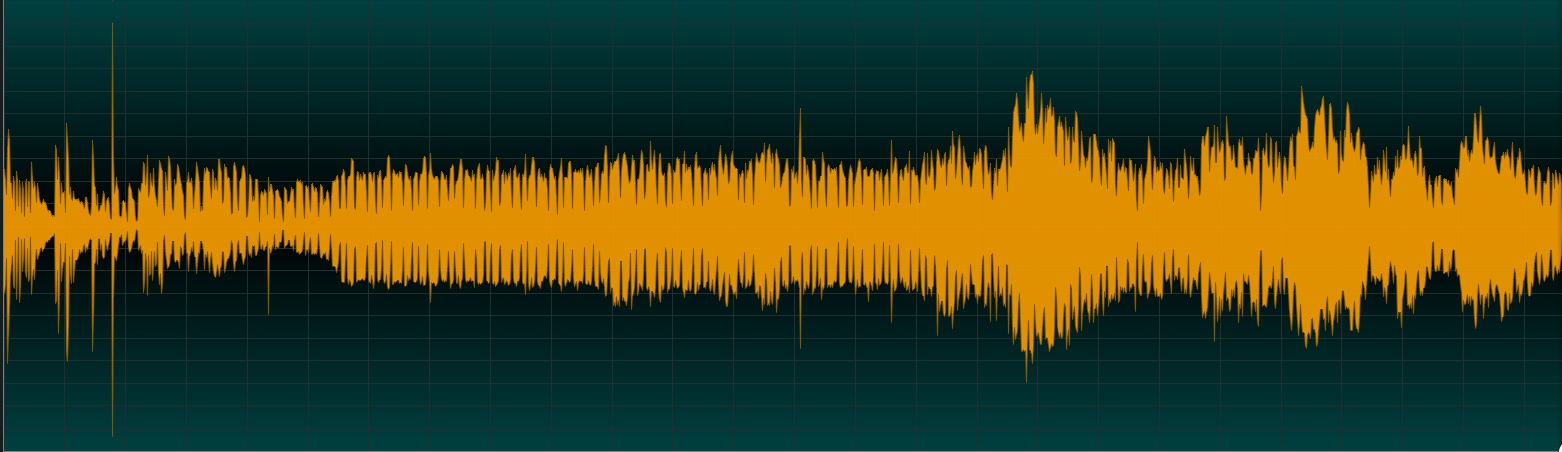

The difference is that with digital recording, our tools can know what the audio signal looks like at every point in time simultaneously: the past, present and future can be seen in parallel. There is a common visualisation that can be used for this: the waveform.

Waveforms are a graphical representation of an audio signal with time along the X axis and amplitude along the Y axis. This is just one way of visualising audio, albeit the most common. It may not be ideal for every situation (it only displays amplitude, and carries no information about pitch, harmony, melody, rhythm, noise etc.), but for the purposes of this project, where amplitude and dynamics are the primary concern, it is reasonably well suited. Waveforms are common in destructive audio editing and as audio regions in a sequencer, but are rarely seen within individual plugins.
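
To make the X/Y description concrete, here is a minimal Python sketch of how a drawable waveform overview might be computed: each display column keeps the minimum and maximum sample of the slice of audio it covers. The function name `waveform_overview` and the choice of samples as plain floats are my own assumptions for illustration; this is not a description of how ocenaudio or any other tool does it.

```python
def waveform_overview(samples, columns):
    """Reduce a sequence of samples to one (min, max) pair per display column.

    Assumption: `samples` is a list of floats (for example in -1.0..1.0)
    and `columns` is the width of the drawing area in pixels.
    """
    if columns <= 0:
        raise ValueError("columns must be positive")
    n = len(samples)
    if n == 0:
        return []
    overview = []
    for c in range(columns):
        # Slice of the signal covered by this column (at least one sample).
        start = c * n // columns
        end = max(start + 1, (c + 1) * n // columns)
        chunk = samples[start:end]
        overview.append((min(chunk), max(chunk)))
    return overview


if __name__ == "__main__":
    # Four samples drawn into two columns.
    print(waveform_overview([0.0, 0.5, -0.5, 1.0], 2))
```

Drawing a vertical line from the minimum to the maximum of each column gives the familiar waveform shape, whatever the length of the recording.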

A waveform made with ocenaudio

Sure, we could record before computers, but we couldn’t really see the audio, and non-real-time work was limited to cutting up and re-assembling the tape; the equipment still processed only the audio fed into it at that moment. The same is true of software plugins today, which is somewhat reasonable (for now), as processing whole audio files is computationally expensive. However, this limitation need not be imposed on the interfaces of the plugins.

Towards a new way of working

I have been prototyping new types of interfaces for these kinds of interactions, where we interact as directly as possible with our material, and take advantage of the affordances the computer provides (project page).

For this type of interface, the main affordance to take advantage of is the ability to see the result of changes across the whole audio signal when doing something that will have an effect on the whole signal.

In this example, after making an adjustment based on the early peaks in the signal, the over-reduction of the later signal is immediately apparent and can be adjusted for. While we still need to listen to the audio to check our assumptions, the immediate feedback can provide an intuition as to the result of our changes.

Seeing the effect of a threshold on the whole track at once.
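
As a rough illustration of the idea, the sketch below applies a hard clipper at a given threshold to a whole signal and reports, per segment of the track, what fraction of samples the threshold touches. It is an assumption-laden toy rather than the prototype's actual code: the names `hard_clip` and `clipped_fraction_per_segment`, the float sample format and the equal-length segmentation are all hypothetical.

```python
def hard_clip(samples, threshold):
    """Limit every sample to the range [-threshold, +threshold]."""
    if threshold < 0:
        raise ValueError("threshold must be non-negative")
    return [max(-threshold, min(threshold, s)) for s in samples]


def clipped_fraction_per_segment(samples, threshold, segments):
    """Return, for each equal-length segment, the fraction of samples
    whose magnitude exceeds the threshold (i.e. that would be clipped)."""
    if segments <= 0:
        raise ValueError("segments must be positive")
    n = len(samples)
    fractions = []
    for i in range(segments):
        chunk = samples[i * n // segments:(i + 1) * n // segments]
        if not chunk:
            fractions.append(0.0)
            continue
        clipped = sum(1 for s in chunk if abs(s) > threshold)
        fractions.append(clipped / len(chunk))
    return fractions


if __name__ == "__main__":
    # Hypothetical track: a quiet first half followed by a loud second half.
    track = [0.2, -0.3, 0.25, -0.2, 0.9, -0.8, 0.95, -0.7]
    print(hard_clip(track, 0.5))
    print(clipped_fraction_per_segment(track, 0.5, 2))
```

A threshold that leaves the quiet opening untouched can still clip every sample later on; showing the per-segment result for the whole track makes that visible without scrubbing back and forth.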

The same type of interaction can also be used to set the final output level, with immediate visual feedback of the amount of available headroom.
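
Headroom can be expressed as the distance between the loudest peak and full scale. The following sketch assumes samples are floats where a magnitude of 1.0 is digital full scale, and reports headroom in decibels; the function name `headroom_db` is hypothetical and not taken from the prototype.

```python
import math


def headroom_db(samples):
    """Return the headroom in dB between the peak sample and full scale.

    Assumption: full scale corresponds to a magnitude of 1.0.
    A silent (or empty) signal has unlimited headroom.
    """
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return math.inf
    # 20 * log10(peak) is the peak level in dBFS; headroom is its negation.
    return -20.0 * math.log10(peak)


if __name__ == "__main__":
    # A peak at half of full scale leaves roughly 6 dB of headroom.
    print(round(headroom_db([0.1, -0.5, 0.25]), 2))
```

Because the calculation uses the peak of the entire signal, the displayed headroom reflects the whole recording rather than only the portion currently playing.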

Below is a longer video demonstrating the prototype in action. The same type of interaction could be used for compressors, limiters and any other threshold-based tool. For the purpose of the demonstration, the tool is a simple hard clipper.

Further work

This is part of an ongoing project; if you find these ideas interesting, you may enjoy the rest of it. The goal is to re-think many of the ways in which audio software is designed.

Get in touch

Are you a musician / pro audio company / producer / software developer / DJ / interaction designer / other? Do you find this work interesting? Do you have your own ideas that you want help realising? If this work interests you, or gives you strong feelings (for or against…), get in touch: arthur@arthurcarabott.com